Bitcoin to mbit bitcoin mining workstation vs gaming

What if I want to upgrade in months just in case I suddenly get extremely serious? So not a problem. I first thought it would be silly to write about monitors also, but they make such a huge difference and are so important that I just have to write about them. To keep this base system current over a few years, would you still recommend an X99 motherboard? This setup would be a great way to get started with deep learning and get a feel for it. You can try to upgrade to Ubuntu. That makes much more sense. Has anyone ever observed or benchmarked this? But what features are important if you want to buy a new GPU? I am thinking of putting together a multi-GPU workstation with these cards. I have a lot of people that know I am in cryptocurrencies that want these rigs. The performance of the GTX is just bad. Since almost nobody runs a system with more than 4 GPUs, as a rule of thumb: The comments are locked in place to await approval if someone new posts on this website. So for example: I know it's a crap card but it's the only Nvidia card I had lying around. Unfortunately, the size of the images is the most significant memory factor in convolutional nets. So CUDA cores are a bad proxy for performance in deep learning. Hi Tim, Thank you very much for all the writing. However, it sounds like this is a business machine and therefore I suggest you buy a business class workstation from a Tier 1 manufacturer because you don't want to be troubleshooting stuff by yourself while your money-making computer is .


Overall, the fan design is often more important than the clock rates and extra features. That is really a tricky issue, Richard. Hi, Very nice post! Which one would you recommend? By chance do you have any opinion about the various RAR crackers? The opinion was strongly against buying the OEM design cards. If you have multivariate time series, a common CNN approach is to use a sliding window over your data spanning X time steps. It actually was referring to the INT8, which is basically just 8-bit integer support. Because I got a second monitor early, I kind of never optimized the workflow on a single monitor. I guess my question is: However, consider also that you will pay a heavy price for the aesthetics of Apple products. I work in the medical imaging domain. I did not realise that this is how the board will allocate the lanes. Albeit at a cost of device memory, one can achieve tremendous increases in computational efficiency when one does this cleverly, as Alex does in his CUDA kernels.
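The sliding-window approach for multivariate time series mentioned above can be sketched as follows. This is a minimal illustration in plain Python, not taken from the original post: the function name `sliding_windows`, the `step` parameter, and the toy data are all assumptions made for the example. Each window holds consecutive time steps and can be treated as one training sample for a convolutional network.

```python
def sliding_windows(series, window, step=1):
    """Cut a list of time steps into overlapping windows.

    `series` has one entry per time step; each entry is a list holding
    one value per variable. Returns a list of windows, each a list of
    `window` consecutive time steps.
    """
    if window > len(series):
        raise ValueError("window is longer than the series")
    return [series[s:s + window]
            for s in range(0, len(series) - window + 1, step)]

# Hypothetical data: 10 time steps, 3 variables per step.
data = [[float(3 * t + v) for v in range(3)] for t in range(10)]
windows = sliding_windows(data, window=4)

# Number of windows, time steps per window, variables per time step.
print(len(windows), len(windows[0]), len(windows[0][0]))  # 7 4 3
```

A larger `step` reduces the overlap between neighbouring windows, which trades the number of training samples against redundancy.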

The performance analysis for this blog post update was done as follows: BN with Watts. Or, in general, will the GTX be faster with its 8 teraflops of performance? This should still be better than the performance you could get from a good laptop GPU. I have two questions if you have time to answer them: Data parallelism in convolutional layers should yield good speedups, as do deep recurrent layers in general. They will be the same price. In my experience, the chassis does not make such a big difference. The K should not be faster than an M. Added discussion of overheating issues of RTX cards.

I am very interested to hear your opinion. Well, currently I am not into cryptocurrency mining. I could not find a source if the problem has been fixed as of. By far the most useful application for your CPU is data preprocessing. As far as I understand there will be two different versions of the NVLink interface, one for regular cards and one for workstations. Based on your guide I gather that choosing a less expensive hexa-core Xeon CPU with either 28 or 40 lanes will not see a great drop in performance. Quick follow-up question: There are only a few specific combinations that support what you were trying to explain so maybe something like: A would be good enough? Once you get the hang of it, you can upgrade and you will be able to run the models that usually win those Kaggle competitions. I am currently looking at the Ti. The xorg-edgers PPA has them and they keep them pretty current. I thank you sincerely for all the posts and replies in your blog and am eager to see more posts from you, Tim! I guess my question is: PCIe lanes often have a latency in the nanosecond range and thus latency can be ignored. They even said that it can also replicate 4 x16 lanes on a CPU which has 28 lanes. Can you recommend a good box which supports: Do you know anything about this? I would convince my advisor to get a more expensive card after I would be able to show some results. Note though, that in most software frameworks you will not automatically save half of the memory by using 16-bit since some frameworks store weights in 32 bits to do more precise gradient updates and so forth.

Here is a good overview [German] http: Hey Tim, thank you so much for your article! What if you donated that dollar to Bill Gates? It is just not worth the trouble to parallelize on these rather slow cards. Basically, your hobby is irritating people, and now you want to share. It turns out that I use pinned memory in my clusterNet project, but it is a bit hidden in the source code: Smaller, cost-efficient GPUs might not have enough memory to run the models that you care about! If the difference is very small, I would choose the cheaper Ti and upgrade to Volta in a year or so. I thought that it is only available in the much more expensive Tesla cards, but after reading through the replies here, I am not sure anymore. You might want to configure the system as a headless (no monitor) server with Tesla drivers and connect to it using a laptop (you can use remote desktop using Windows, but I would recommend installing Ubuntu). Torch7, Theano, Caffe? For learning purposes and maybe some model development I am considering a low-end card (cores, 2GB). Specifically the 3GB model. Also, the 6xxx family AMD cards have fewer stream processors than the 5xxx family. Over the past several years I only worked with laptops in my free time as I had some good machines at work. Hello Tim:

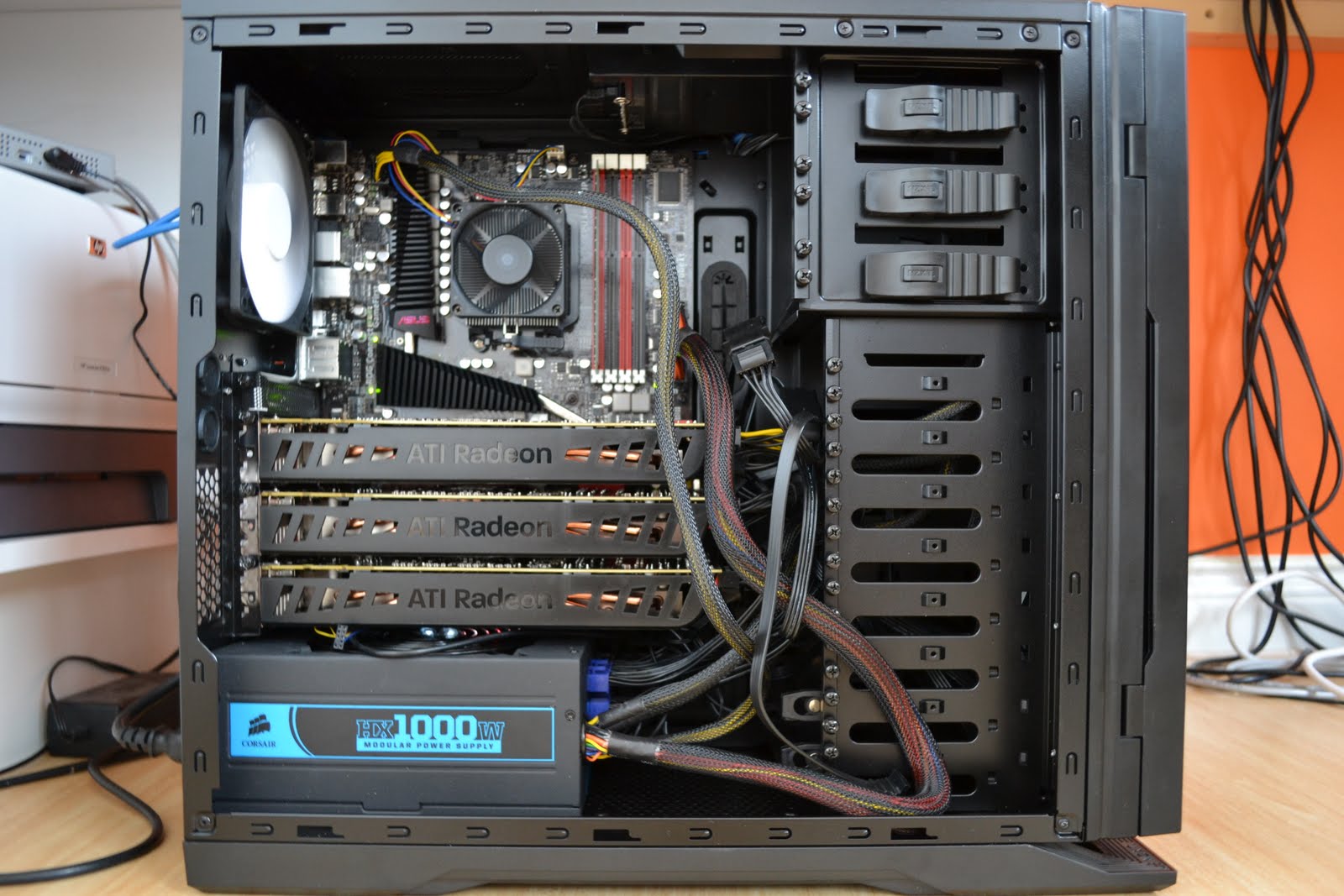

Radeons are better than GeForce

In the competition, I used a rather large two-layered deep neural network with rectified linear units and dropout for regularization, and this deep net barely fitted into my 6GB GPU memory. What strikes me is that A and B should not be equally fast. Blacklist nouveau driver 3. If you use TensorFlow you can implement loss scaling yourself: Albeit at a cost of device memory, one can achieve tremendous increases in computational efficiency when one does this cleverly, as Alex does in his CUDA kernels. Psychology tells us that concentration is a resource that is depleted over time. That is correct, for multiple cards the bottleneck will be the connection between the cards, which in this case is the PCIe connection. Edition Hard Disk: This means you could synchronize 0. Do you suggest these custom versions for example: However, similarly to TPUs, the raw costs add up quickly.
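The idea behind the loss scaling mentioned above can be illustrated without any framework. The sketch below is not TensorFlow code and not the original author's implementation; it only emulates 16-bit rounding with Python's `struct` module (format `e` is IEEE half precision). The scale factor and the gradient value are hypothetical numbers chosen to show the effect: a gradient that is too small for 16-bit floats underflows to zero unless the loss, and therefore every gradient, is multiplied by a constant first and divided by it again in full precision.

```python
import struct

def to_fp16(x):
    """Round a Python float to IEEE half precision and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

LOSS_SCALE = 1024.0   # hypothetical constant, a power of two
true_grad = 1e-8      # hypothetical gradient, too small for FP16

# Without scaling, the gradient underflows to zero in half precision.
naive = to_fp16(true_grad)

# With loss scaling: multiplying the loss by LOSS_SCALE multiplies every
# gradient by the same factor (chain rule), so the FP16 value survives.
scaled = to_fp16(true_grad * LOSS_SCALE)

# Unscale in full precision before the weight update.
recovered = scaled / LOSS_SCALE

print(naive, recovered)
```

A power of two is a convenient choice for the scale because multiplying and dividing by it only changes the exponent, so the scaling itself adds no rounding error as long as the value stays in range.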

Would you tell me the reason? I heard the original paper used 2 GTX and yet took a week to train the 7-layer deep network? Please let me know where I am going wrong! In the competition, I used a rather large two-layered deep neural network with rectified linear units and dropout for regularization, and this deep net barely fitted into my 6GB GPU memory. Just trying to figure out if it's worth it. Thanks for sharing your working procedure with one monitor. But if it is not necessary, then maybe I can spare my time to learn other deep NN stuff, which is overwhelming. Another option would be to use the Nervana system deep learning libraries, which can run models in 16-bit, thus halving the memory footprint. Look forward to your advice. Of course this could still happen for certain datasets. So do not waste your time with CUDA! On what kind of task have you tested this? Thanks for the great post. Reference 1. Windows went on fine, although I will rarely use it, and Ubuntu will go on shortly. The servers have a slow interconnect, that is, the servers only have gigabit Ethernet, which is a bit too slow for parallelism. Very interesting post. Just a quick note to say thank you and congrats for this great article.
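To get a feel for why gigabit Ethernet is considered too slow for parallel training, a rough back-of-the-envelope estimate helps. The sketch below is an assumption-laden illustration, not a measurement: the model size of 100 million parameters is hypothetical, the bandwidth is the theoretical peak of a 1 Gbit/s link, and protocol overhead and latency are ignored, so real transfers would be slower.

```python
def sync_seconds(num_parameters, bytes_per_parameter, bandwidth_bytes_per_s):
    """Lower bound on the time to send one full copy of the gradients."""
    return num_parameters * bytes_per_parameter / bandwidth_bytes_per_s

GIGABIT_ETHERNET = 1e9 / 8   # 1 Gbit/s = 125 MB/s, theoretical peak
PARAMETERS = 100_000_000     # hypothetical model size
FP32_BYTES = 4               # one 32-bit float per gradient value

seconds = sync_seconds(PARAMETERS, FP32_BYTES, GIGABIT_ETHERNET)
print(f"{seconds:.1f} s per gradient exchange")  # 3.2 s per gradient exchange
```

If a single training step on the GPU takes a fraction of a second, an exchange of several seconds per step would dominate the run time under these assumptions.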

The best mining GPU's currently in stock:

Keep in mind that all these numbers are reasonable estimates only and will differ from the real results; results from a testing environment that simulates the real environment will make it clear if CPU servers or GPU servers will be optimal. Here the comparison is two Pascal versus two Titans. I look forward to reading your other posts. A gaming laptop can work well for any task that needs graphics-heavy applications or any kind of image manipulation. How much do you think the will be in USD, Euros, etc. You can get more mining performance out of this card by overclocking it and by using a custom BIOS for unlocking the extra cores. Even with very small batch sizes you will hit the limits pretty quickly. For recurrent networks, the sequence length is the most important parameter and for common NLP problems, one can expect similar or slightly worse speedups compared to convolutional networks. I would convince my advisor to get a more expensive card after I would be able to show some results. Thanks for the pointers. If it is available but with the same speed as float 32, I obviously do not need it. One possible information portal could be a wiki where people can outline how they set up various environments (Theano, Caffe, Torch, etc.). What IDE are you using in that pic? I will update the blog post soon. Overall, the fan design is often more important than the clock rates and extra features. On average, the second core is only used sparsely, so that 3 threads can often feed 2 GPUs just fine. So CUDA cores are a bad proxy for performance in deep learning. Going to order the parts for my new system tomorrow; will post some benchmarks soon. Try to recheck your configuration. Air and water cooling are all reasonable choices in certain situations.

Cuda convnet2 has some 8 GPU code for a similar topology, but I do not think it will work out of the box. Right now I do not have time for that, but I will probably migrate my blog in two months or so. It might well be that your GPU driver is meddling. I will do video or CNN images classification sort things. Answering questions which are easy for me to answer is a form of respect. And please, tell me too about your personal preference. This post actually made my day. Thanks for a great article, it helped a lot. Is it sufficient to have if you mainly want to get started with DL, play around with it, do the occasional Kaggle comp, or is it not even worth spending the money in this case? If you use two GPUs then it might make sense to consider a motherboard upgrade. Should I change the motherboard, any advice? This makes algorithms complicated and prone to human error, because you need to be careful how to pass data around in your system, that is, you need to take into account the whole PCIe topology and on which network and switch the InfiniBand card sits. This is especially true for RTX Ti cards. So for example: Hi Tim Dettmers, Your blog is awesome. This blog post will delve into these questions and will lend you advice which will help you to make a choice that is right for you.


I have no hard numbers of when good scaling begins in terms of parallelism, but it is already difficult to utilize a big GPU fully with 24 timesteps. I will cover it in the next article. I hope that installing Linux on the SSD works, as I read that the previous version of this SSD made some problems. Does it still hold true that adding a second GPU will allow me to run a second algorithm but that it will not increase performance if only one algorithm is running? The choice of brand should be made first and foremost on the cooler, and if they are all the same the choice should be made on the price. Very helpful. I think you will need to get in touch with the manufacturer for that. I was looking for something like this. I generally use Theano and TensorFlow. If you really need a lot of extra memory, the RTX Titan is the best option, but make sure you really do need that memory! Modern libraries like TensorFlow and PyTorch are great for parallelizing recurrent and convolutional networks, and for convolution, you can expect a speedup of about 1. However, this benchmark page by Soumith Chintala might give you some hint what you can expect from your architecture given a certain depth and size of the data. The same goes for neural nets and their solution accuracy. It turns out that I use pinned memory in my clusterNet project, but it is a bit hidden in the source code: So all in all, these measures are quite opinionated and do not rely on good evidence. If you use custom water cooling, make sure your case has enough space for the radiators. I am new to neural networks and Python. But in a lot of places I read about this ImageNet db.

I am a competitive computer vision or machine translation researcher: Your article and help was of great help to me sir and I thank you from the bottom of my heart. Is there a means you can remove me from that service? The performance of the GTX is just bad. Why do you only write on hardware? I have 2 choices in hand now: Thanks a lot. Albeit at a cost of device memory, one can achieve tremendous increases in computational efficiency when one does this cleverly, as Alex does in his CUDA kernels. If you only run a single Titan X Pascal then you will indeed be fine without any other cooling solution. What would you recommend for a laptop GPU setup rather than a desktop? But if it is not necessary, then maybe I can spare my time to learn other deep NN stuff, which is overwhelming. I just want to make you aware of other downsides with GPU instances, but the overall conclusion stays the same (less productivity, but very cheap): It is also based on the Polaris GPU architecture and comes with Stream Processors, 32 compute units and performance up to 5. Hi Tim Dettmers, I am working on 21GB of input data which consists of video frames. However, if you can wait a bit I recommend you wait for Pascal cards, which should hit the market in two months or so. It might well be that your GPU driver is meddling. For state-of-the-art models you should have more than 6GB of memory. I will do video or CNN images classification sort things. Learn .

Top Graphics Cards for Cryptocurrency Mining [Altcoins & Bitcoins]

If your datasets are Thanks for the suggestions. I searched the web and this is a common problem and there seems to be no fix. The compute performance of this card stands at Hi Tim, I have benefited from this excellent post. But overall this effect is negligible. I am a statistician and I want to go into the deep learning area. I would round up in this case and get a watts PSU. Radeon RX Vega 56 is the second best graphics card for mining purposes. Very well written, especially for newbies. If you use TensorFlow you can implement loss scaling yourself: Is there an assumption in the above tests, that the OS is Linux e. I use various neural nets i. HBM is definitely the way to go to get better performance. I was eager to see any info on the support of half precision 16-bit processing in GTX. I tried it for a long time and had frustrating hours with a live boot CD to recover my graphics settings; I could never get it running properly on headless GPUs. This is very much true. Do you think it could deliver increased performance on a single experiment? I am interested in having your opinion on cooling the GPU. There seems to be a speed vs.

Starting with one GPU first and upgrading to a second one if needed. Radeon RX Vega 56 is the second best graphics card for mining purposes. The best practice is probably to look at a site like http: If you dread working with Lua (it is quite easy actually, most of the code will be in Torch7, not in Lua), I am also working on my own deep learning library which will be optimized for multiple GPUs, but it will take a few more weeks until it reaches a state which is usable for the public. If you can find a cheap GTX this might also be worth it, but a GTX will be more than enough if you just start out with deep learning. So probably it is better to get a GTX if you find a cheap one. Thank you for all this praise, this is encouraging! Anandtech has a good review on how it works and its effect on gaming: Any thoughts on this? Dear Eric, Thank you. Is the new Titan Pascal that cooling efficient? Hello Tim:

Would there be any issues with drivers etc.? So you should be more than fine with 16 or 28 lanes. For the second strategy, you do not need a very good CPU. Another advantage is that you will be able to compile almost anything without any problems, while on other systems (Mac OS, Windows) there will always be some problems or it may be nearly impossible to configure a system well. By far the most useful application for your CPU is data preprocessing. No comparison of Quadro and GeForce is available anywhere. If you can get a used Maxwell Titan X cheap this is a solid choice. One concern that I have is that I also use triple monitors for my work setup. Is it CUDA cores?